A common definition of artificial intelligence is thinking like a human, or more concretely:

[The automation of] activities that we associate with human thinking, activities such as decision-making, problem solving, learning…

(Bellman, 1978) [1]

Although this definition does not mention it, in the context of human thinking the notions of consciousness and self-awareness quickly arise. A typical argument is that artificial intelligence can only be called intelligence if it is aware of itself, or if it is conscious.

This argument also underlies one of the main criticisms of current large language models (LLMs) such as GPT: the models cannot introspect their own statements and can therefore confidently make claims that are wrong, even though they contain enough knowledge to detect the lie they are telling.

The Internal Monologue

Consciousness is a fuzzy topic, and computer scientists and other engineers and scientists therefore consider it thin ice. Yet a few ideas can be extracted and formulated in concrete terms, especially now that abstract ideas like (the simulation of) thinking have received a technical incarnation in LLMs (as we describe in a prior blog post).

An early spark of consciousness has been reached with the “internal monologue”. The internal monologue allows an LLM to answer questions it cannot answer directly by reasoning its way to the answer. In practice, this is achieved with prompt engineering that instructs the LLM to think in steps, as in the following example (taken from [2]):

USER: A juggler can juggle 16 balls. Half of the balls are golf balls, and half of the golf balls are blue. How many blue golf balls are there?

A: Let’s think step by step.

---

(Output) There are 16 balls in total. Half of the balls are golf balls. That means that there are 8 golf balls. Half of the golf balls are blue. That means that there are 4 blue golf balls.
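To make the prompt engineering concrete, here is a minimal sketch of how such a prompt could be assembled. The function names are hypothetical, no real LLM is called, and the second stage (feeding the reasoning back and asking for a final answer) is an assumption added for illustration rather than something the example above shows.

```python
REASONING_TRIGGER = "Let's think step by step."


def build_reasoning_prompt(question: str) -> str:
    """First stage: ask the model to write out its reasoning."""
    return f"USER: {question}\nA: {REASONING_TRIGGER}"


def build_answer_prompt(question: str, reasoning: str) -> str:
    """Assumed second stage: feed the reasoning back and ask for the answer."""
    return (
        f"{build_reasoning_prompt(question)} {reasoning}\n"
        "Therefore, the answer is"
    )


question = (
    "A juggler can juggle 16 balls. Half of the balls are golf balls, "
    "and half of the golf balls are blue. How many blue golf balls are there?"
)
# In practice this text would come back from the LLM; here it is pasted in.
reasoning = (
    "There are 16 balls in total. Half of the balls are golf balls. "
    "That means that there are 8 golf balls. Half of the golf balls are blue. "
    "That means that there are 4 blue golf balls."
)

print(build_reasoning_prompt(question))
print(build_answer_prompt(question, reasoning))
```

The point of the sketch is that the “monologue” lives entirely in the text of the prompt: the model itself is unchanged.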

The results achieved using this internal monologue are already astonishing. Is this a precursor of artificial consciousness? Probably not on its own: there is more to consciousness. The good news: there is a model!

A Model for Consciousness

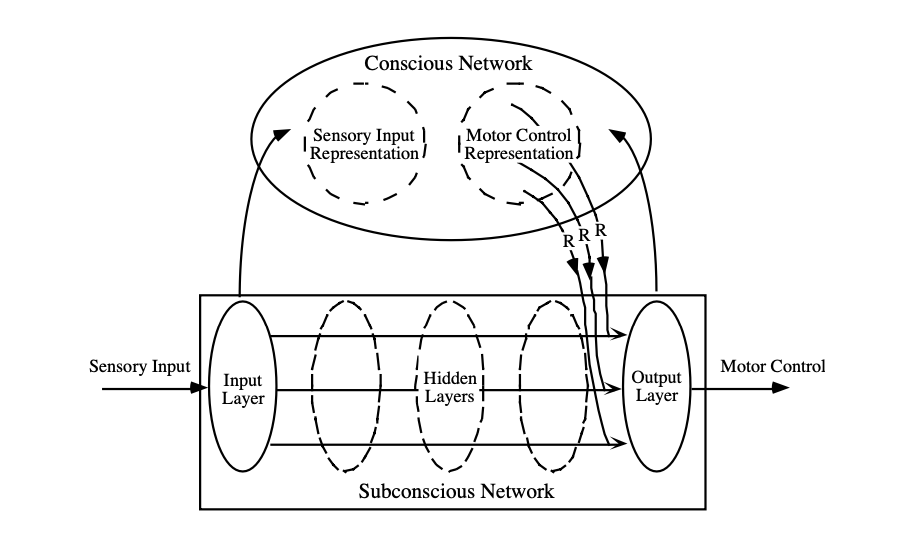

The following is a pragmatic model of consciousness, introduced by Bar-Yam [3]. It starts from the observation that the mind is aware of sensory information (e.g. we view things consciously) and of motor information (e.g. we can move our hand consciously). Yet subconscious processes clearly handle the same information too (we move our hand instinctively to protect ourselves against a ball flying towards us).

The subconscious can therefore be represented in the model by one type of neural network: a feedforward neural network. Its strength is processing vast amounts of information, which is necessary when millions of sensory data points bombard us every second.

A second, conscious network is realized by an attractor network, a recurrent architecture related to what is today studied as reservoir neural networks. An attractor network does not have the data-processing prowess of a feedforward neural network, but it allows for something the feedforward neural network cannot provide: control.

Bar-Yam’s model of the subconscious and the conscious (taken from Dynamics of Complex Systems [3]).

Although the conscious network does not receive all incoming sensory information, it is compatible with the feedforward neural network and can reflect on the actions the subconscious feedforward neural network takes: “what am I seeing?”, “should I react?”, or “was this reaction correct?” In this model, the conscious network can also be seen as a will that controls the impulses.
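To give a feeling for what an attractor network does, the sketch below uses a small Hopfield network, a classic example of an attractor network. It is chosen here for illustration and is not claimed to be the exact network of Bar-Yam’s model; the patterns and function names are made up. The network stores two patterns and, started from a corrupted state, settles back into the nearest stored one.

```python
def train(patterns):
    """Hebbian learning: build a symmetric weight matrix from +1/-1 patterns."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Update units one at a time until the state stops changing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:  # a fixed point (attractor) has been reached
            break
    return state


stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train(stored)

noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with one unit flipped
print(recall(weights, noisy) == stored[0])  # prints True
```

The stored patterns act as attractors: whatever nearby state the network starts in, it is pulled towards one of them. This is the sense in which such a network can hold on to a stable “self-image” rather than simply passing inputs through.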

In terms of a prior blog post about strategy, the conscious network plays the role of the “strategy module”. It compares the operations of the subconscious with a self-image. In the strategy case specifically, the subconscious offers tactical options, the self-image takes the form of the strategy, and the conscious network chooses the tactical option that is most compatible with that strategy.

The Conscious Hack for LLMs

Can we reconcile these ideas with the operation of current-day LLMs?

Let’s start with the technical implementation: at the core of LLMs lie large (hence the first L of LLM) feedforward neural networks. Given an input sequence (the context), they yield a word that is likely to follow it.

Currently, the loop around this network is rather simple: take a human-given input sequence, evaluate the likelihood of each word that could follow, and choose one of the top candidates. Then append the chosen word to the input sequence, use the result as the new input sequence, and repeat.
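The loop just described can be sketched in a few lines. The “model” below is a made-up lookup table that only looks at the last word, which is a strong simplification of a real LLM that conditions on the whole context; all names and numbers are assumptions for illustration.

```python
import random

# Toy stand-in for the feedforward network: maps the last word of the
# context to likelihoods of the next word. The table is invented.
TOY_MODEL = {
    "the": {"juggler": 0.5, "balls": 0.3, "answer": 0.2},
    "juggler": {"juggles": 0.7, "counts": 0.3},
    "juggles": {"the": 0.6, "balls": 0.4},
    "counts": {"the": 1.0},
    "balls": {".": 1.0},
    "answer": {".": 1.0},
}


def next_word_likelihoods(context):
    """Return candidate next words with their likelihoods."""
    return TOY_MODEL.get(context[-1], {".": 1.0})


def generate(prompt, max_new_words=10, top_k=2, seed=0):
    """Predict, pick one of the top candidates, append, repeat."""
    rng = random.Random(seed)
    context = list(prompt)
    for _ in range(max_new_words):
        likelihoods = next_word_likelihoods(context)
        # Keep only the top_k most likely candidates.
        top = sorted(likelihoods.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
        words, weights = zip(*top)
        # Choose one of them at random, weighted by likelihood.
        word = rng.choices(words, weights=weights, k=1)[0]
        context.append(word)  # the output becomes part of the next input
        if word == ".":
            break
    return context


print(" ".join(generate(["the"])))
```

Note that nothing in this loop ever looks back at what has been produced and asks whether it makes sense; the only feedback is the concatenation itself.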

Would it not be natural to replace this primitive loop by an attractor network? This would address several issues:

- The (conscious) attractor network would be in charge of conducting an internal dialogue with the subconscious and checking the logical soundness of the output before it is produced. Compare this to the primitive feedback loop used currently, or to in-text instructions like “Let’s think step by step”.

- The attractor network would not need to do the heavy lifting of estimating the next word. Instead, it compares the suggestions it receives from the feedforward neural network, much as we check the plausibility of a sentence before saying it (at least most of the time).

- The split mirrors how we formulate ideas: although we know what we want to say (i.e. the strategy), we are not always aware of which words we use to say it (i.e. the tactics). You do not have to think explicitly about every next word you say; it comes intuitively.
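The division of labor in the list above can be illustrated with a toy selection step. This is only a sketch under strong assumptions: the “subconscious” is a hard-coded list of candidates with made-up likelihoods, and the “conscious” check is a pair of simple rules rather than an attractor network. All names are hypothetical.

```python
def propose(context):
    """Stand-in for the subconscious network: candidates with likelihoods."""
    return {
        "There are 8 blue golf balls.": 0.5,
        "There are 4 blue golf balls.": 0.4,
        "Golf is played outdoors.": 0.1,
    }


def compatibility(candidate, strategy):
    """Stand-in for the conscious network: share of checks a candidate passes."""
    results = [check(candidate) for check in strategy]
    return sum(results) / len(results)


def select(context, strategy):
    """Pick the candidate most compatible with the strategy.

    Likelihood is only used to break ties between equally compatible options.
    """
    candidates = propose(context)
    return max(
        candidates,
        key=lambda c: (compatibility(c, strategy), candidates[c]),
    )


strategy = [
    lambda text: "blue golf balls" in text,  # stay on the question
    lambda text: str(16 // 2 // 2) in text,  # agree with the arithmetic
]

print(select("How many blue golf balls are there?", strategy))
# prints: There are 4 blue golf balls.
```

The most likely candidate is not the one that is chosen: the controller overrules the subconscious when its top suggestion conflicts with the strategy, without ever having to generate a sentence itself.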

Practical Considerations

A similar implementation of these ideas is already available in AutoGPT, which uses another LLM in place of a conscious network. Although this enables some longer-term planning, it is prone to compounding errors, which calls into question the use of an LLM without introspection as the “conscious” network.

The relatively small context window of LLMs and their lack of information abstraction may further preclude their use as “conscious” networks.

Attractor networks have recently regained interest in the form of reservoir neural networks. They have several advantages over feedforward neural networks, such as their ability to process periodic information and form loops, and their much lower complexity.

Conclusion

It is unlikely that an additional neural network (e.g. the consciousness hack) will suffice to explain the nature of consciousness. Yet, it is often a jury-rigged prototype that shows the feasibility of an idea. Think of the first airplane, the first smartphone, or even the first, tiny language models in the 1980s.

Furthermore, there are several good arguments for replacing the hard-coded loop of current-day LLMs with a more sophisticated, yet theoretically plausible model. One of them is an informed internal monologue that selects tactics consistent with the large-scale strategy.

References

1. S.J. Russell, P. Norvig, Artificial Intelligence - A Modern Approach

2. T. Kojima, S. Gu, M. Reid, Y. Matsuo, Y. Iwasawa, Large Language Models are Zero-Shot Reasoners

3. Y. Bar-Yam, Dynamics of Complex Systems